Modifying task settings

For each task, you can modify the settings according to your needs.

To open the Settings window:

- Click the Manage button in the bottom left of the DATA WAREHOUSE panel.

- Select a task in the left panel.

- Click the Settings toolbar button.

A window opens displaying the following tabs: General, Advanced, and Consolidation.

General Tab

In the General tab, the following settings are available:

- Log level: Select the log level granularity, which can be any of the following:

- INFO (default) - Logs informational messages that highlight the progress of the ETL process at a coarse-grained level.

- VERBOSE - Logs fine-grained informational events that are most useful to debug the ETL process.

TRACE - Logs finer-grained informational events than the VERBOSE level.

The log levels VERBOSE and TRACE impact performance. Therefore, you should only select them for troubleshooting if advised by Qlik Support.

-

Default History Resolution: Choose the granularity of the "From Date" column value when a new history record is inserted:

-

Minutes to update with the date and time. This is the default. When this option is selected, a new record will be inserted each time the data is updated.

-

Days to update with the date only. When this option is selected, only one record (the most recently updated) will be inserted at the end of the day.

Information noteThese settings will be applied, regardless of the original source column (when mapped) or Change Table [header__] timestamp column (when not mapped) granularity. So, for instance, if a source column with date and time granularity is mapped to the "From Date" column and Days is selected, then only one record (the most recently updated) will be inserted at the end of the day.

-

- When updating a non-null data warehouse column with a null value:

-

Do not change the target value: Select this to keep values unchanged between two mappings for the same record. For instance, if the same record exists in two different source tables (A and B), but the record in Table A has a null value for data that is present in Table B (e.g. ZIP Code). In this case, if the record in Table A arrives after the record in Table B, the target value will be set to null. Selecting this option will prevent such an occurrence.

Information noteWhen creating a new project, the default behavior is to write NULL instead of keeping the values unchanged between the two mappings.

-

Set the target value to null: Select this if you want the source and target values to correspond. This can be useful, for example, when a person moves address and one of the column values (e.g. "State") changes to null.

Information noteWhen ingesting changes from an Oracle source, this option requires full supplemental logging for all source table columns that exist on the target and any source columns referenced in filters, data quality rules, lookups, and expressions.

-

Advanced Tab

In the Advanced tab, the following settings are available:

- Sequential Processing: Select this option if you want all the data warehouse tasks to run sequentially, even if they can be run in parallel. This may be useful for debugging or profiling, but it may also affect performance.

-

Maximum number of database connections: Enter the maximum number of connections allowed. The default size is 10.

For more information, see Determining the required number of database connections.

-

JVM memory settings: Edit the memory for the java virtual machine (JVM) if you experience performance issues. Xms is the minimum memory; Xmx is the maximum memory. The JVM starts running with the Xms value and can use up to the Xmx value.

Information noteOnly the following characters are supported (shown as a regular expression):

/^[-a-zA-Z0-9:]*$/

- Position in default workflow: Select where you want the data warehouse tasks to appear in the default workflow. For more information on workflows, see Workflows.

-

Optimize for initial load: Optimizes initial load in certain cases. Only select this option if the source tables do not reference missing records, use lookups, map different source records to the same record, do not contain Type 1 self-references, or contain historical records. Note also that when this option is selected, the following features are not supported:

- Data quality rules

- Derived attributes

- Consolidation of uniform sources (see Consolidation below)

- The Handle duplicates option

In the event that the task is used for incremental loading (using query-based change processing), clear the check box after the initial load task completes and regenerate the task.

-

Write task statement duration to the TLOG_PROCLOG table in the data warehouse: This option is useful for troubleshooting performance issues with ETL processes as it records the duration of each task statement in a special table (named TLOG_PROCLOG) in the data warehouse. You can then use this information to locate task statements with abnormal duration times and modify them accordingly.

-

Do not create indexes for data warehouse tables: During the task, Compose creates an internal index for each of the Data Warehouse tables (for query optimization). When running several consecutive tasks (e.g. via a workflow) with a large volume of tables, this process can be extremely time-consuming. In such a scenario, best practice is to select the check box for each of the tasks, except the last one.

- Do not truncate staging tables: Select this option if you want the ETL process to preserve the staging tables. Only use for debugging.

- Stop processing after populating the staging tables: Select if you do not want to proceed to populating the warehouse. Only use for debugging.

-

Do not drop temporary tables: Select this option if you want to keep the temporary tables created during the ETL process. Only use for debugging.

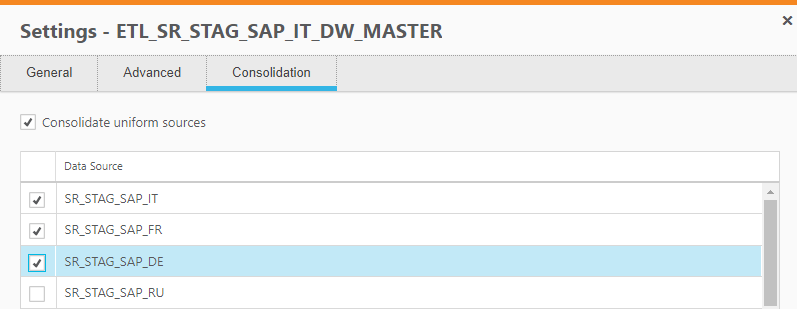

Consolidation Tab

Requires Compose August 2021 Service Release 03 or later.

When the Consolidate uniform sources option is enabled, Compose will read from the selected data sources and write the data to one consolidated entity. This is especially useful if your source data is managed across several databases with the same structure, as instead of having to define multiple data warehouse tasks (one for each source), you only need to define a single task that consolidates the data from the selected data sources.

The list of selectable data sources reflects the list of Source Databases that appears in the Databases panel in Designer view.

To facilitate downstream processing, you might want to add a record identifier column (for example, SourceID) to the primary key of all your entities. However, if one entity references another (for example, Orders → Customers), a naming conflict will arise as the new column (SourceID) will then appear in the referencing entity (Orders) twice. To prevent such conflicts from occurring, you should add the column to each entity with a unique prefix derived from the entity name. So, continuing with our Orders → Customers relationship example, the column name in the Orders entity should be orders_SourceID while the column name in the Customers entity should be customers_SourceID.

Prerequisites

- The structure of the tables in the selected sources must be identical.

-

Source type can be Table or View, but not Query.

Information noteThe source data does not have to reside in tables only or in views only; it can be ingested from a combination of views and tables. For example, the source data might be ingested from tables A, B, and C in Landing 1, and views A, B, and C in Landing 2.

See also: Editing column mappings.

Limitations and considerations

-

The Optimize for initial load option is not supported with consolidation.

-

A selected data source cannot contain an asterisk (*) in its specified schema name (asterisks in schema names are supported with Microsoft SQL Server only).

See also: Using Microsoft SQL Server as a source

-

If you have existing Full Load and Change Tables (CDC) tasks, setting the consolidation settings for the Full Load task will not automatically set the consolidation settings for the Change Tables task as well. You need to do this manually.

See also:Adding and duplicating tasks and Creating a Change Processing task

-

Uniform consolidation settings will not be included in task settings that are exported to a CSV file.

See also: Migrating objects as CSV files

-

Lineage and project documentation will not reflect all of the selected sources.

See also: Exporting project documentation and Lineage and impact analysis.

-

Custom ETLs (Pre Loading ETL, Multi Table ETL, Single Table ETL, and Post Loading ETL) will run only once, regardless of how many sources are selected.

See also: Creating and managing custom ETLs.

-

Generating the ETLs will only validate the Landing Zone database(s) defined in the mappings, and not all of the data sources selected in the Consolidation tab.

-

Error marts will be created for each Landing Zone database.

To see the number of reported rows in each error mart:

- Open the Manage Data Warehouse Tasks window, and select the consolidation task in the left pane.

- Select the Monitor tab, and click the Total Reported Rows number.

Alternatively:

- Switch to the main Monitor view and select the consolidation task.

- In the Progress Status tab (below the tasks list), click the Total Reported Rows number.

The Error Mart - <task-name> window opens.

Error Mart window showing the number of rows reported for each error mart

For more information on error marts, see Viewing information in the monitor.

Monitoring tasks with consolidates sources

The monitor shows the sum total of all the records (for example, the total number of INSERTs) from all of the selected sources.